In the rapidly evolving field of artificial intelligence, interpreting results accurately is crucial to harnessing its full potential. However, this task often poses challenges due to the complexity of AI models and data. Fear not! In this comprehensive guide, we will walk you through the best strategies to master AI result interpretation and unveil the hidden truths within your data.

Understanding the Importance of AI Result Interpretation

Accurate result interpretation is what turns a model’s output into decisions you can trust, so it largely determines AI’s real impact. Common roadblocks, such as messy data, poorly chosen metrics, and opaque models, often lead to confusion and misinterpretation.

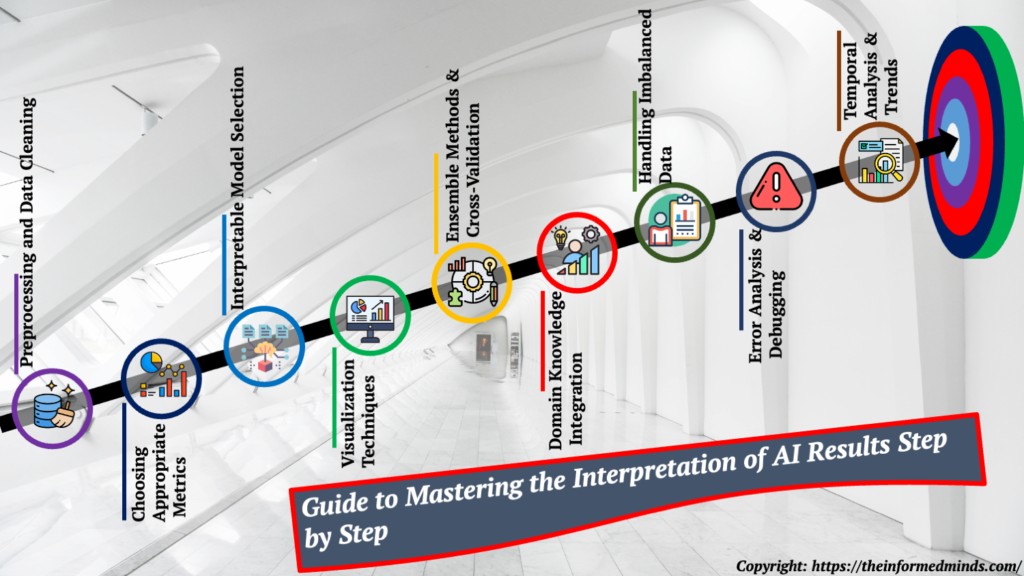

Step-by-Step Guide to Master AI Result Interpretation

1. Preprocessing and Data Cleaning

- The vital role of clean and well-structured data in accurate result interpretation.

- Techniques to identify and rectify data anomalies, outliers, and missing values.

- Exploring data normalization, standardization, and handling categorical variables.
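The cleaning steps above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the `ages` and `colors` columns are made-up toy data, median imputation and the 1.5 × IQR rule are just one common choice each, and a real project would more likely use a library such as pandas or scikit-learn.

```python
from statistics import mean, median, pstdev, quantiles

# Hypothetical toy column with one missing value (None) and one extreme value.
ages = [23, 25, None, 27, 24, 26, 95]

# 1. Impute missing values with the median of the observed values.
observed = [x for x in ages if x is not None]
fill = median(observed)
imputed = [fill if x is None else x for x in ages]

# 2. Flag outliers with the 1.5 * IQR rule.
q1, _, q3 = quantiles(imputed, n=4)
iqr = q3 - q1
low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
outliers = [x for x in imputed if x < low or x > high]

# 3. Standardize to zero mean and unit variance (z-scores).
mu, sigma = mean(imputed), pstdev(imputed)
z_scores = [(x - mu) / sigma for x in imputed]

# 4. One-hot encode a categorical column (categories in alphabetical order).
colors = ["red", "green", "red", "blue"]
categories = sorted(set(colors))
one_hot = [[int(c == cat) for cat in categories] for c in colors]

print("outliers:", outliers)
print("categories:", categories)
print("one-hot rows:", one_hot)
```

Whether a flagged value such as 95 is an error or a genuine observation is a judgment call, so it is usually worth inspecting outliers before dropping them.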

2. Choosing Appropriate Metrics

- Selecting metrics aligned with your AI model’s objectives.

- Diving into metrics like precision, recall, F1-score, and more, and their real-world implications.

- Understanding the trade-offs between different metrics and their impact on decision-making.
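To make the trade-offs concrete, here is a minimal sketch that computes precision, recall, and F1-score by hand. The labels are invented for illustration, and the helper name `precision_recall_f1` is our own, not a library function.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1

# Hypothetical labels: 4 positives, 6 negatives.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0, 0, 0]

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
precision, recall, f1 = precision_recall_f1(y_true, y_pred)
print(f"accuracy={accuracy:.2f} precision={precision:.2f} "
      f"recall={recall:.2f} f1={f1:.2f}")
```

In this toy case accuracy is 0.70 while recall is only 0.50: the model misses half of the positives, which accuracy alone would hide.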

3. Interpretable Model Selection

- Opting for models that offer transparency and explainability.

- Exploring decision trees, linear models, and techniques like LIME and SHAP for model interpretation.

- Comparing the pros and cons of different interpretable models to choose the best fit for your task.

4. Visualization Techniques

- Leveraging visualizations to comprehend complex AI results.

- Heatmaps, feature importance plots, and activation maps for intuitive interpretation.

- Exploring interactive visualization tools and libraries to enhance result understanding.

5. Ensemble Methods and Cross-Validation

- Utilizing ensemble models for robust interpretations.

- Employing cross-validation to assess model generalization and stability.

- Exploring bagging, boosting, and stacking techniques to improve result reliability.
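As a rough illustration of the cross-validation idea, the sketch below splits a small dataset into k folds and looks at how much the error varies between folds. The data and the "model" (a baseline that always predicts the training mean) are hypothetical stand-ins, and `k_fold_indices` is our own helper; in practice you would usually shuffle the data first and plug in your real model.

```python
from statistics import mean, pstdev

def k_fold_indices(n_samples, k):
    """Yield (train_idx, test_idx) pairs for k contiguous, non-overlapping folds."""
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        test_idx = list(range(start, start + size))
        train_idx = list(range(start)) + list(range(start + size, n_samples))
        yield train_idx, test_idx
        start += size

# Hypothetical targets; the "model" simply predicts the mean of the training fold.
y = [3.0, 5.0, 4.0, 6.0, 5.0, 7.0, 6.0, 8.0, 7.0, 9.0]

fold_errors = []
for train_idx, test_idx in k_fold_indices(len(y), k=5):
    prediction = mean(y[i] for i in train_idx)
    mae = mean(abs(y[i] - prediction) for i in test_idx)
    fold_errors.append(mae)

print("MAE per fold:", [round(e, 2) for e in fold_errors])
print(f"mean={mean(fold_errors):.2f}, spread={pstdev(fold_errors):.2f}")
```

A large spread across folds is a hint that any single score, and any interpretation built on it, may not be stable.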

6. Domain Knowledge Integration

- Incorporating domain expertise to contextualize AI results.

- Balancing statistical findings with practical insights.

- Collaborating with domain experts to validate interpretations and refine models.

7. Handling Imbalanced Data

- Strategies for dealing with imbalanced datasets and skewed class distributions.

- Techniques like oversampling, undersampling, and Synthetic Minority Over-sampling Technique (SMOTE).

- Evaluating the impact of imbalanced data on result interpretation and considering the appropriate approach.
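Here is a minimal sketch of the simplest of these techniques, random oversampling, which duplicates minority-class rows until the classes are balanced. Note that this is not SMOTE (SMOTE synthesizes new points by interpolating between neighbors), and the function name and toy data are assumptions made for illustration.

```python
import random
from collections import Counter

def random_oversample(rows, labels, seed=0):
    """Duplicate randomly chosen minority rows until every class matches the largest."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_rows, out_labels = list(rows), list(labels)
    for label, count in counts.items():
        pool = [r for r, l in zip(rows, labels) if l == label]
        for _ in range(target - count):
            out_rows.append(rng.choice(pool))
            out_labels.append(label)
    return out_rows, out_labels

# Hypothetical dataset: 8 examples of class 0, only 2 of class 1.
rows = [[0.1], [0.2], [0.3], [0.4], [0.5], [0.6], [0.7], [0.8], [5.0], [5.5]]
labels = [0] * 8 + [1] * 2

balanced_rows, balanced_labels = random_oversample(rows, labels)
print("before:", Counter(labels))
print("after: ", Counter(balanced_labels))
```

Resampling should be applied to the training split only. Oversampling before splitting leaks copies of the same rows into the test set and makes results look better than they are.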

8. Error Analysis and Debugging

- Conducting thorough error analysis to identify patterns of misclassification or unusual behaviors.

- Debugging techniques to trace issues back to their root causes and refine your AI model.

- Utilizing tools like confusion matrices, error plots, and misclassification reports for in-depth analysis.
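A confusion matrix is easy to build by hand, which helps demystify what the library versions report. The sketch below uses invented labels and a hypothetical `confusion_matrix` helper; rows are true classes and columns are predicted classes.

```python
from collections import Counter

def confusion_matrix(y_true, y_pred):
    """Return (labels, matrix) where matrix[i][j] counts true=labels[i], pred=labels[j]."""
    labels = sorted(set(y_true) | set(y_pred))
    counts = Counter(zip(y_true, y_pred))
    matrix = [[counts[(t, p)] for p in labels] for t in labels]
    return labels, matrix

# Hypothetical predictions from an image classifier.
y_true = ["cat", "cat", "dog", "dog", "dog", "bird", "bird", "cat"]
y_pred = ["cat", "dog", "dog", "dog", "cat", "bird", "dog", "cat"]

labels, matrix = confusion_matrix(y_true, y_pred)
print("labels:", labels)
for label, row in zip(labels, matrix):
    print(f"{label:>5}: {row}")

# List the individual mistakes so they can be inspected one by one.
errors = [(i, t, p) for i, (t, p) in enumerate(zip(y_true, y_pred)) if t != p]
print("misclassified (index, true, predicted):", errors)
```

Off-diagonal cells show which classes get confused with each other, and the `errors` list points to the exact examples worth a closer look.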

9. Temporal Analysis and Trends

- Uncovering temporal patterns and trends in AI results over time.

- Utilizing time series analysis and forecasting to anticipate future outcomes.

- Incorporating external factors and events that may influence the interpretation of temporal data.
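One simple way to surface a trend is to smooth a metric with a moving average. The weekly accuracy figures below are made up, and a moving average is only a first look, not a substitute for proper time series analysis or forecasting.

```python
def moving_average(values, window):
    """Average each run of `window` consecutive values."""
    return [
        sum(values[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(values))
    ]

# Hypothetical weekly accuracy of a deployed model.
weekly_accuracy = [0.91, 0.90, 0.92, 0.89, 0.85, 0.84, 0.80]

smoothed = moving_average(weekly_accuracy, window=3)
print("smoothed:", [round(v, 3) for v in smoothed])

# A steadily falling smoothed curve suggests drift rather than week-to-week noise.
is_declining = all(later < earlier for earlier, later in zip(smoothed, smoothed[1:]))
print("steady decline:", is_declining)
```

If a decline like this lines up with an external event, such as a product change or a seasonal shift, that context belongs in the interpretation.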

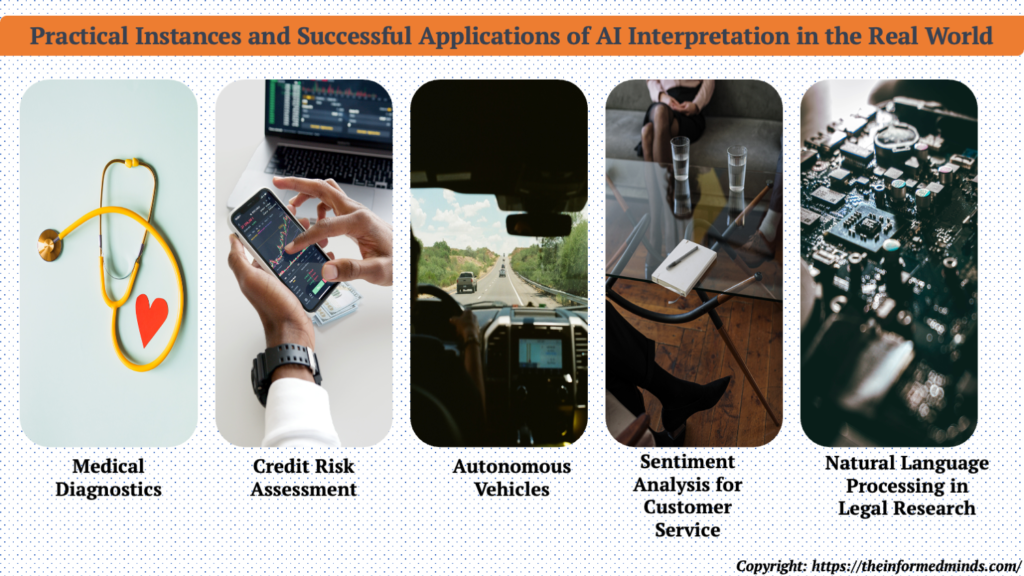

Real-World Examples and Case Studies

The following scenarios show where AI result interpretation plays a pivotal role:

1. Medical Diagnostics

In medical diagnostics, AI models can predict diseases from medical images or patient data. Proper interpretation of the AI’s predictions helps doctors understand the basis for a diagnosis. For instance, in interpreting an AI’s identification of a tumor in a medical image, doctors need to know which features the AI used to make its prediction, aiding in decision-making about treatment strategies.

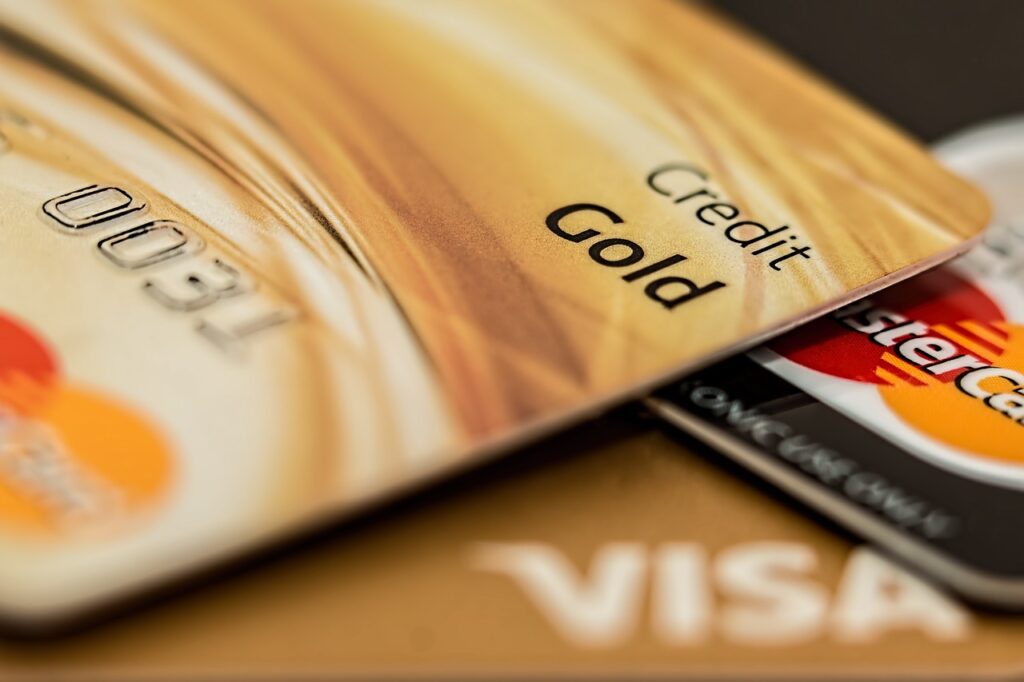

2. Credit Risk Assessment

In the financial sector, AI models are used to assess credit risk for loan applicants. Interpretation of the model’s decisions is vital to ensure that the rejection or approval of loans is transparent and fair. Understanding the key factors influencing the model’s prediction allows financial institutions to explain their decisions to applicants and regulators.

3. Autonomous Vehicles

AI-powered autonomous vehicles rely on complex models to navigate and make split-second decisions. Interpretation of these decisions is critical for safety and accountability. Understanding why an autonomous vehicle took a particular action (e.g., changing lanes, slowing down) is crucial in case of accidents or system failures.

4. Sentiment Analysis for Customer Service

Businesses use AI-powered sentiment analysis to gauge customer sentiments from reviews and feedback. Accurate interpretation of sentiment predictions helps companies understand customer perceptions, identify areas of improvement, and respond effectively to issues. Misinterpretation could lead to misaligned strategies and missed opportunities.

5. Natural Language Processing in Legal Research

In the legal field, AI-powered natural language processing is used to sift through large volumes of legal documents. Interpreting AI results can assist lawyers in finding relevant case precedents, statutes, and legal opinions quickly. Clear interpretation ensures that lawyers can confidently make arguments based on the AI’s findings.

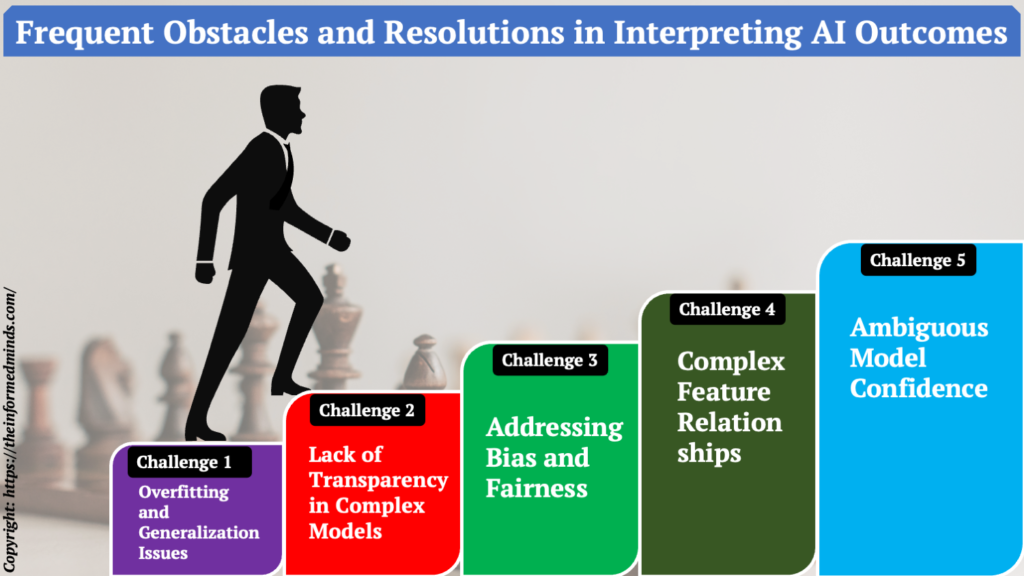

Common Challenges and Solutions

Challenge 1: Overfitting and Generalization Issues

Challenge: AI models may perform exceptionally well on training data but fail to generalize to new, unseen data, leading to misleading interpretations.

Solution: Use cross-validation to detect overfitting, and apply regularization (or dropout layers, for neural networks) to reduce it. Learning rate annealing can help training converge, but the balance between model complexity and generalization is mainly controlled by regularization strength, model capacity, and early stopping.
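To show what regularization does in the simplest possible setting, the sketch below fits a one-feature linear model with and without an L2 penalty. The data, the `fit_slope` helper, and the penalty value are assumptions chosen for illustration; the formula is the standard closed-form solution for a no-intercept least-squares fit.

```python
def fit_slope(x, y, l2=0.0):
    """Slope w minimizing sum((y - w*x)^2) + l2 * w^2 (no intercept)."""
    numerator = sum(a * b for a, b in zip(x, y))
    denominator = sum(a * a for a in x) + l2
    return numerator / denominator

# Hypothetical data, roughly y = 2x.
x = [1.0, 2.0, 3.0, 4.0]
y = [2.1, 3.9, 6.2, 7.8]

w_plain = fit_slope(x, y)           # ordinary least squares
w_ridge = fit_slope(x, y, l2=10.0)  # L2-regularized fit

print(f"unregularized slope: {w_plain:.4f}")
print(f"regularized slope:   {w_ridge:.4f}")
```

The penalty pulls the weight toward zero. With a well-chosen strength (typically picked by cross-validation) this trades a little training accuracy for better behavior on unseen data.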

Challenge 2: Lack of Transparency in Complex Models

Challenge: Complex AI models, like deep neural networks, are difficult to interpret due to their intricate internal mechanisms.

Solution: Use techniques like LIME (Local Interpretable Model-Agnostic Explanations) and SHAP (SHapley Additive exPlanations) to generate explanations for model predictions. Consider using simpler, more interpretable models like decision trees for better transparency.

Challenge 3: Addressing Bias and Fairness

Challenge: AI models can inadvertently inherit biases from training data, leading to biased results that are ethically problematic.

Solution: Conduct thorough bias analysis on training data and results. Use techniques like re-sampling, re-weighting, or adversarial training to mitigate bias. Regularly update and diversify training data to ensure fairness and inclusivity.

Challenge 4: Complex Feature Relationships

Challenge: Interpretation becomes challenging when AI models rely on intricate relationships between features that are hard to grasp.

Solution: Utilize feature importance techniques like permutation importance and SHAP values to highlight the contribution of individual features to predictions. Visualizations like partial dependence plots can help showcase how specific features influence outcomes.
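Permutation importance is simple enough to sketch without any libraries: shuffle one feature at a time and measure how much the error grows. Everything here is a toy assumption, including the data, the stand-in `model` function (which deliberately ignores the second feature), and the helper names.

```python
import random

def mse(model, X, y):
    """Mean squared error of model predictions on rows X."""
    return sum((model(row) - t) ** 2 for row, t in zip(X, y)) / len(y)

def permutation_importance(model, X, y, n_repeats=5, seed=0):
    """Average increase in MSE when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = mse(model, X, y)
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            increases.append(mse(model, X_perm, y) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances

def model(row):
    """Stand-in for a trained model: uses feature 0 and ignores feature 1."""
    return 3 * row[0]

# Hypothetical data where the target depends only on feature 0.
data_rng = random.Random(42)
X = [[data_rng.random(), data_rng.random()] for _ in range(50)]
y = [3 * row[0] for row in X]

importances = permutation_importance(model, X, y)
print("importance per feature:", [round(v, 4) for v in importances])
```

Shuffling the ignored feature changes nothing, so its importance is zero, while shuffling the feature the model relies on makes the error jump. Keep in mind that strongly correlated features can make permutation importance misleading.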

Challenge 5: Ambiguous Model Confidence

Challenge: AI models often provide confidence scores or probabilities alongside predictions, but it’s unclear what these scores represent.

Solution: Calibrate model confidence scores to reflect actual prediction uncertainty. Use techniques like uncertainty estimation through Bayesian neural networks to quantify prediction reliability. Clearly communicate the meaning of confidence scores to end-users.
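A quick way to check whether confidence scores mean what they appear to mean is to compare stated confidence with observed accuracy. The sketch below computes a simple binned expected calibration error (ECE); the bin count, helper name, and example numbers are assumptions for illustration.

```python
def expected_calibration_error(confidences, correct, n_bins=5):
    """Weighted average gap between mean confidence and accuracy per bin."""
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)  # confidence 1.0 goes in the last bin
        bins[idx].append((conf, ok))
    total = len(confidences)
    ece = 0.0
    for bucket in bins:
        if not bucket:
            continue
        avg_conf = sum(c for c, _ in bucket) / len(bucket)
        accuracy = sum(ok for _, ok in bucket) / len(bucket)
        ece += len(bucket) / total * abs(avg_conf - accuracy)
    return ece

# Hypothetical model that always reports 90% confidence.
confidences = [0.9] * 10
well_calibrated = [1] * 9 + [0]      # right 9 times out of 10
overconfident = [1] * 6 + [0] * 4    # right only 6 times out of 10

ece_good = expected_calibration_error(confidences, well_calibrated)
ece_bad = expected_calibration_error(confidences, overconfident)
print(f"ECE when 90% confident and 90% correct: {ece_good:.2f}")
print(f"ECE when 90% confident and 60% correct: {ece_bad:.2f}")
```

A score near zero means the confidence values can be read roughly as probabilities. A large gap means they should be recalibrated before anyone relies on them.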

By addressing these common challenges with the suggested solutions, you can enhance the accuracy, transparency, and reliability of AI result interpretation. This will lead to better-informed decisions and a deeper understanding of AI model behavior.

Conclusion: Decoding Data’s Hidden Truths and Banishing Confusion Forever!

In a world increasingly driven by AI, mastering result interpretation is a skill that sets you apart. By following the strategies outlined in this guide, you can confidently navigate the complexities of AI results, uncover invaluable insights, and make informed decisions that drive success.

Remember, AI is as powerful as its interpreter. So, equip yourself with the best practices shared here, and embark on your journey to decode data’s hidden truths and banish confusion forever!

Feel free to bookmark this guide for future reference and share it with fellow AI enthusiasts who seek clarity in the midst of complexity. The realm of AI awaits – let’s interpret results like never before!

Frequently Asked Questions

Q1: How to interpret machine learning results?

A: Interpreting machine learning results involves analyzing model predictions, feature importance, and relationships between variables. Techniques like feature importance plots, SHAP values, and partial dependence plots help understand how inputs affect outputs.

Q2: How to master artificial intelligence?

A: To master artificial intelligence, one should study fundamental concepts like machine learning, neural networks, and natural language processing. Practice by working on projects, staying updated with advancements, and exploring AI frameworks and libraries.

Q3: How do you evaluate an AI model?

A: Evaluating an AI model involves metrics like accuracy, precision, recall, and F1-score, along with diagnostic tools like confusion matrices. Cross-validation and held-out validation and test datasets help assess the model’s performance and its ability to generalize.

Q4: What is your interpretation of AI?

A: AI, or artificial intelligence, refers to the development of computer systems that can perform tasks that typically require human intelligence. This includes tasks like problem-solving, learning from data, recognizing patterns, and making decisions.

Q5: What are the three main evaluation levels of interpretability?

A: Interpretability is commonly discussed at three levels: algorithmic, global, and local. Algorithmic transparency concerns how the learning algorithm builds the model from data, global interpretability examines how the trained model behaves as a whole, and local interpretability focuses on understanding individual predictions.

Q6: What are the three methods that interpret machine learning models?

A: Three methods to interpret machine learning models are model-specific interpretation (using knowledge of model’s architecture), model-agnostic interpretation (using techniques applicable to various models), and post hoc interpretation (analyzing model behavior after training).

Q7: How can I improve my AI skills?

A: Improving AI skills involves continuous learning, hands-on projects, and exploring real-world applications. Online courses, books, tutorials, and collaborating with others in the AI community contribute to skill enhancement.

Q8: Can I learn AI without coding?

A: While basic programming knowledge is beneficial, there are tools and platforms that offer graphical interfaces for building AI models without extensive coding. However, a deeper understanding of coding can provide more flexibility and control.

Q9: What programming language is used for AI?

A: Python is the most popular programming language for AI due to its extensive libraries (such as TensorFlow, PyTorch, scikit-learn) and ease of use. Other languages like R and Julia are also used in specific AI applications.

Q10: How does AI analyze data?

A: AI analyzes data using algorithms that recognize patterns, relationships, and anomalies within the data. Machine learning and deep learning techniques learn from data to make predictions or classifications, while AI-driven analytics tools extract insights from large datasets.

Q11: How do you evaluate machine learning data?

A: Evaluating machine learning data involves preprocessing steps like cleaning, normalization, and feature engineering. It also includes dividing data into training, validation, and test sets to assess model performance accurately.

Q12: How can I make my AI model more accurate?

A: To improve AI model accuracy, you can use more training data, enhance data quality, tune hyperparameters, consider different algorithms/architectures, and apply techniques like ensembling. Regular validation and testing ensure continual improvement.

Q13: What are 4 types of AI?

A: The four types of AI are reactive machines (limited to specific tasks), limited memory AI (can learn from data), theory of mind AI (understands emotions/intentions), and self-aware AI (sentient and human-like).

Q14: What are the four approaches to AI?

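A: This question is often answered with the same four categories as Q13: reactive machines, limited memory AI, theory of mind AI, and self-aware AI. In the classic textbook framing by Russell and Norvig, however, the four approaches to AI are thinking humanly, acting humanly, thinking rationally, and acting rationally.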

Q15: What is the difference between explanation and interpretation in AI?

A: Explanation in AI refers to providing reasons for model decisions, helping users understand “why.” Interpretation involves understanding model behavior, revealing how inputs relate to outputs, and exploring feature importance.

The Informed Minds

I'm Vijay Kumar, a consultant with 20+ years of experience specializing in Home, Lifestyle, and Technology. From DIY and Home Improvement to Interior Design and Personal Finance, I've worked with diverse clients, offering tailored solutions to their needs. Through this blog, I share my expertise, providing valuable insights and practical advice for free. Together, let's make our homes better and embrace the latest in lifestyle and technology for a brighter future.