
In today’s fast-paced technological landscape, demand for scalable Artificial Intelligence (AI) solutions keeps growing. From complex algorithms to vast datasets, the challenges of scaling AI are multifaceted. This article examines what limits AI scalability and offers actionable ways to overcome those limits.

1. Introduction

1.1 Understanding AI Scalability

AI scalability refers to the ability of an AI system to handle increasing workloads efficiently. As organizations embrace AI for diverse applications, scalability becomes a pivotal factor in ensuring optimal performance.

1.2 Importance of Scalability in AI

Scalability is not just a technical concern; it directly determines whether an AI implementation is practical and successful. A model that performs well as a prototype can still fail in production if processing speed, data handling, or algorithmic efficiency cannot keep pace with real-world load.

2. Challenges in AI Scalability

2.1 Limited Processing Power

One of the primary challenges in scaling AI systems is the limitation in processing power. As AI algorithms become more sophisticated, they demand substantial computational resources. In scenarios where processing power is constrained, AI applications may experience delays or compromised performance. This challenge necessitates innovative solutions to enhance processing capabilities, such as leveraging parallel processing or optimizing algorithms for efficiency.

2.2 Data Bottlenecks

The explosion of data in the digital era poses a significant hurdle in AI scalability. Handling vast datasets efficiently is crucial for AI applications, and traditional systems may struggle with the sheer volume of information. Data bottlenecks can lead to delays in processing and hinder real-time decision-making. Overcoming this challenge involves implementing robust data management strategies, including data sharding and distributed storage solutions, to ensure seamless data flow and accessibility.

2.3 Algorithmic Constraints

Not all AI algorithms are inherently scalable. Some algorithms may function well with small datasets or simple tasks but encounter limitations when tasked with more extensive datasets or complex computations. Identifying scalable algorithms is crucial in building AI systems that can grow with the demands of the application. This challenge emphasizes the need for continuous research and development to create algorithms that can handle evolving requirements without compromising efficiency.
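As a small illustration (not taken from any specific AI system), here are two ways to check a dataset for duplicate records. Both return the same answer, but the pairwise version performs up to n(n-1)/2 comparisons, so it is fine for a few hundred rows and impractical for millions, while the hash-set version makes a single pass. The function names are hypothetical, and the items are assumed to be hashable.

```python
def has_duplicates_pairwise(items: list) -> bool:
    """Compare every pair of items: O(n^2) time, no extra memory."""
    n = len(items)
    for i in range(n):
        for j in range(i + 1, n):
            if items[i] == items[j]:
                return True
    return False


def has_duplicates_hashed(items: list) -> bool:
    """Single pass with a hash set: O(n) expected time, O(n) extra memory.

    Assumes the items are hashable (strings, numbers, tuples, ...).
    """
    seen = set()
    for item in items:
        if item in seen:
            return True
        seen.add(item)
    return False


def pairwise_comparisons(n: int) -> int:
    """Worst-case number of comparisons the pairwise version performs."""
    return n * (n - 1) // 2


print(pairwise_comparisons(1_000))      # 499500
print(pairwise_comparisons(1_000_000))  # 499999500000
```

Growing the input 1,000-fold multiplies the pairwise work by roughly a million, which is exactly the kind of algorithm that works on small data and stalls on large data.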

3. Best Strategies for Scaling AI

3.1 Leveraging Cloud Computing

Cloud computing is a powerful strategy for addressing scalability challenges in AI. Cloud platforms provide on-demand access to a large pool of resources, including processing power, storage, and specialized AI accelerators. By using cloud services, organizations can scale their AI applications up or down as workloads fluctuate. This elasticity can improve both performance and cost-effectiveness, because capacity is provisioned when demand rises and released when it falls.
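One way to express "scale with the workload" is a rule that sizes a worker pool from the current backlog. The sketch below is a hypothetical policy, not any cloud provider's API: `desired_workers`, the per-worker throughput, and the drain-time target are all illustrative assumptions.

```python
import math


def desired_workers(
    queue_length: int,
    jobs_per_worker_per_second: float,
    target_drain_seconds: float,
    min_workers: int = 1,
    max_workers: int = 50,
) -> int:
    """Hypothetical policy: enough workers to clear the backlog within the target time.

    The result is clamped to [min_workers, max_workers] so the pool never drops
    to zero (avoiding cold starts) or grows beyond a budget ceiling.
    """
    if jobs_per_worker_per_second <= 0 or target_drain_seconds <= 0:
        raise ValueError("throughput and drain time must be positive")
    capacity_per_worker = jobs_per_worker_per_second * target_drain_seconds
    needed = math.ceil(queue_length / capacity_per_worker)
    return max(min_workers, min(max_workers, needed))


print(desired_workers(12_000, 20, 60))     # 10: each worker clears 1,200 jobs in 60 s
print(desired_workers(0, 20, 60))          # 1: clamped to the minimum
print(desired_workers(1_000_000, 20, 60))  # 50: clamped to the budget ceiling
```

Scaling back down when the queue empties is where the cost savings come from; a real deployment would typically also add a cooldown period so the pool does not oscillate.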

3.2 Distributed Computing Architectures

To overcome the limitations of single-machine processing, distributed computing architectures are essential. Distributing computational tasks across multiple nodes enables parallel processing, which can significantly boost overall system performance. Technologies like Apache Hadoop and Apache Spark have proven themselves in large-scale data processing. With this approach, the system can scale horizontally by adding more computational nodes as the workload grows, although coordination and data-transfer overhead mean the gains are rarely perfectly linear.
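The map/shuffle/reduce model behind Hadoop MapReduce (and available in Spark through operations such as `reduceByKey`) can be sketched in plain Python with the classic word-count example: a map step that runs independently on each input, a shuffle that routes every key to exactly one reducer, and a reduce step that aggregates each key. Everything here runs in one process for illustration; in Hadoop or Spark the framework spreads these phases across nodes. The function names are hypothetical, and `zlib.crc32` is just an illustrative choice of stable hash for partitioning.

```python
import zlib
from collections import defaultdict
from itertools import chain
from typing import Iterable


def map_phase(document: str) -> list[tuple[str, int]]:
    """Emit (word, 1) for every word. Each document could be mapped on a different node."""
    return [(word, 1) for word in document.lower().split()]


def shuffle_phase(pairs: Iterable[tuple[str, int]], n_reducers: int) -> list[dict[str, list[int]]]:
    """Group values by key and route each key to exactly one reducer via a stable hash."""
    partitions = [defaultdict(list) for _ in range(n_reducers)]
    for key, value in pairs:
        reducer = zlib.crc32(key.encode("utf-8")) % n_reducers
        partitions[reducer][key].append(value)
    return partitions


def reduce_phase(partition: dict[str, list[int]]) -> dict[str, int]:
    """Sum the counts for every key assigned to this reducer."""
    return {key: sum(values) for key, values in partition.items()}


def word_count(documents: list[str], n_reducers: int = 2) -> dict[str, int]:
    mapped = chain.from_iterable(map_phase(doc) for doc in documents)
    result: dict[str, int] = {}
    for partition in shuffle_phase(mapped, n_reducers):
        # Reducers own disjoint key sets, so their outputs can simply be merged.
        result.update(reduce_phase(partition))
    return result


counts = word_count(["the cat sat", "the dog sat", "the end"])
print(counts == {"the": 3, "cat": 1, "sat": 2, "dog": 1, "end": 1})  # True
```

Because no map call depends on another, and no reducer depends on another, adding nodes adds capacity to both phases.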

3.3 Optimizing Algorithms for Efficiency

An often overlooked but critical strategy for scaling AI is algorithm optimization. Refining algorithms for efficiency ensures that they remain effective even as complexity grows or datasets get larger. This may involve streamlining code, implementing parallel processing within algorithms, or adopting more efficient computational techniques. By continuously refining algorithms, organizations can build AI systems that meet current demands and adapt to future ones, making scalability an inherent feature of their applications.
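One concrete example of this kind of optimization is replacing a multi-pass computation with a single-pass one. The sketch below uses Welford's online algorithm to maintain a running mean and variance without storing the data, so memory stays constant however long the stream gets, and the result is more numerically stable than the naive sum-of-squares formula. The `RunningStats` class is illustrative, not part of any specific library.

```python
class RunningStats:
    """Welford's online algorithm: one pass over the data, O(1) memory."""

    def __init__(self) -> None:
        self.count = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x: float) -> None:
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self._m2 += delta * (x - self.mean)  # uses the *updated* mean

    @property
    def variance(self) -> float:
        """Sample variance (n - 1 denominator); 0.0 until at least two values are seen."""
        return self._m2 / (self.count - 1) if self.count > 1 else 0.0


stats = RunningStats()
for value in [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]:  # could be an unbounded stream
    stats.update(value)
print(stats.count, round(stats.mean, 6), round(stats.variance, 6))  # 8 5.0 4.571429
```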

3.4 Containerization with Kubernetes

Containerization, combined with an orchestration platform such as Kubernetes, can transform how AI applications scale. Containers package applications with their dependencies, providing consistency across environments. Kubernetes orchestrates these containers, automating the deployment, scaling (adding or removing replicas as load changes), and management of AI applications. Together they improve portability and resource efficiency, contributing to a more agile and scalable AI infrastructure.
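The Kubernetes Horizontal Pod Autoscaler documents its core scaling rule as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). The function below mirrors that arithmetic, including the default 10% tolerance within which no scaling happens. It is a simplified illustration only: the real controller also handles missing metrics, pod readiness, and stabilization windows, and it is configured through a HorizontalPodAutoscaler resource rather than code. The function name and the `max_replicas` default are assumptions.

```python
import math


def hpa_desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    tolerance: float = 0.1,
    min_replicas: int = 1,
    max_replicas: int = 10,  # arbitrary illustrative ceiling
) -> int:
    """Simplified HPA rule: ceil(current_replicas * current_metric / target_metric)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: leave the deployment alone
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


print(hpa_desired_replicas(4, current_metric=90, target_metric=60))  # 6: scale out
print(hpa_desired_replicas(4, current_metric=63, target_metric=60))  # 4: within tolerance
print(hpa_desired_replicas(4, current_metric=20, target_metric=60))  # 2: scale in
```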

3.5 AutoML: Democratizing AI Development

AutoML (Automated Machine Learning) is changing how AI scales by automating much of the process of building and training machine learning models, and on some platforms deploying them as well. It enables organizations with limited AI expertise to use sophisticated models and algorithms. AutoML platforms handle tasks such as feature engineering, model selection, and hyperparameter tuning, lowering the barrier to entry for scaling AI applications. This democratization of AI development lets a broader audience benefit from scalable AI without extensive technical knowledge.
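At its simplest, the hyperparameter-tuning part of AutoML is a search loop: try candidate settings, score each on validation data, and keep the best. The sketch below uses exhaustive grid search; many AutoML tools use smarter strategies (for example Bayesian optimization or early stopping of weak candidates). `toy_validation_score` is a hypothetical stand-in for training and evaluating a real model, and the parameter names are illustrative.

```python
from itertools import product
from typing import Callable


def grid_search(
    evaluate: Callable[[dict], float], param_grid: dict[str, list]
) -> tuple[dict, float]:
    """Score every combination in param_grid and return the best (params, score)."""
    names = list(param_grid)
    best_params: dict = {}
    best_score = float("-inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(params)  # in practice: train, then score on held-out data
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_validation_score(params: dict) -> float:
    """Hypothetical stand-in for a real train-and-validate run (higher is better)."""
    return -((params["learning_rate"] - 0.1) ** 2) - 0.01 * (params["max_depth"] - 6) ** 2


grid = {"learning_rate": [0.01, 0.1, 0.3], "max_depth": [3, 6, 9]}
best, score = grid_search(toy_validation_score, grid)
print(best)  # {'learning_rate': 0.1, 'max_depth': 6}
```

Each evaluation is independent, so in a scaled-out setting the candidates can be trained in parallel across machines.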

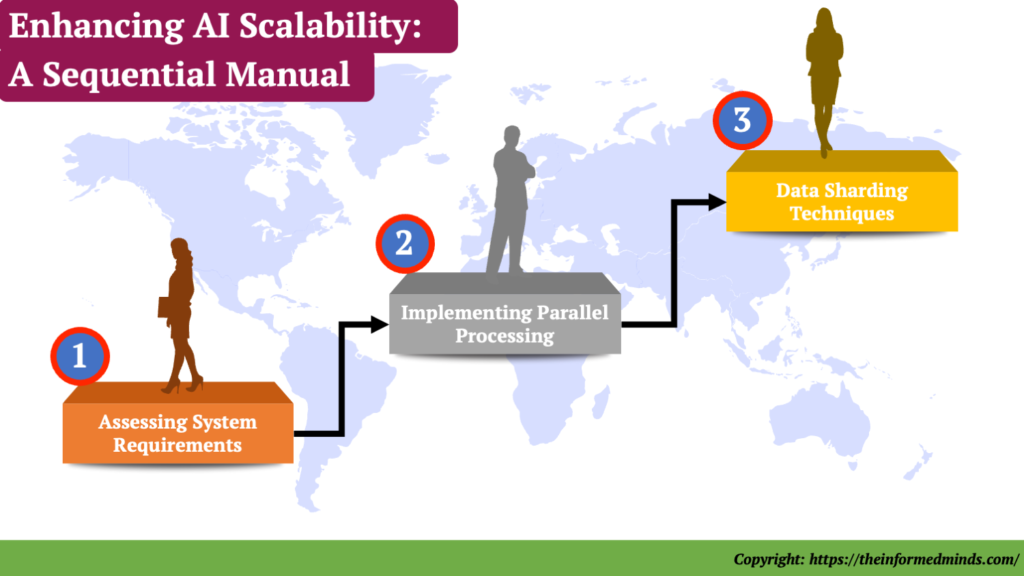

4. How to Boost AI Scalability: A Step-by-Step Guide

4.1 Assessing System Requirements

The first step in boosting AI scalability is a comprehensive assessment of system requirements. Understand the specific needs of your AI application, considering factors such as data volume, processing speed, and real-time requirements. This assessment forms the foundation for making informed decisions throughout the scalability enhancement process.
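A useful back-of-the-envelope check during this assessment is Little's law: the average number of requests in flight equals the arrival rate multiplied by the average time each request spends in the system (L = λW). The sketch below turns a target request rate and a measured latency into a rough concurrency requirement; the function name and the 25% headroom default are assumptions, not recommendations from this article.

```python
import math


def required_concurrency(
    requests_per_second: float, avg_latency_seconds: float, headroom: float = 0.25
) -> int:
    """Estimate concurrent capacity via Little's law (L = arrival rate * time in system).

    headroom adds a safety margin for bursts; 0.25 (25%) is an arbitrary assumption.
    """
    in_flight = requests_per_second * avg_latency_seconds
    return math.ceil(in_flight * (1 + headroom))


# 200 predictions/s at 250 ms each keeps about 50 requests in flight on average.
print(required_concurrency(200, 0.25, headroom=0.0))  # 50
print(required_concurrency(200, 0.25))                # 63 (62.5 rounded up)
```

If one model replica could serve, say, 8 requests concurrently, that estimate would translate to ceil(63 / 8) = 8 replicas.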

4.2 Implementing Parallel Processing

Parallel processing is a key technique for enhancing AI scalability. By dividing tasks into smaller sub-tasks and processing them simultaneously, parallel processing can significantly reduce wall-clock time: the total amount of computation stays roughly the same, but it is spread across more cores or machines. Implementation may involve multi-core processors or distributed computing frameworks. Implemented well, parallel processing lets the system absorb a growing workload without sacrificing performance.
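The divide, process, and combine pattern described above can be sketched with Python's standard `concurrent.futures` module. The helper names are hypothetical. The sketch defaults to `ThreadPoolExecutor` so it runs anywhere; for CPU-bound pure-Python work the global interpreter lock limits thread-level speedups, so passing `executor_cls=ProcessPoolExecutor` (with module-level worker functions and an `if __name__ == "__main__":` guard) is the usual choice.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def split_into_chunks(items: Sequence[T], n_chunks: int) -> list[Sequence[T]]:
    """Split items into at most n_chunks contiguous slices of roughly equal size."""
    size = max(1, -(-len(items) // n_chunks))  # ceiling division
    return [items[i:i + size] for i in range(0, len(items), size)]


def parallel_map_reduce(
    items: Sequence[T],
    process_chunk: Callable[[Sequence[T]], R],
    combine: Callable[[list[R]], R],
    n_workers: int = 4,
    executor_cls=ThreadPoolExecutor,
) -> R:
    """Process each chunk concurrently, then merge the partial results."""
    chunks = split_into_chunks(items, n_workers)
    with executor_cls(max_workers=n_workers) as pool:
        partial_results = list(pool.map(process_chunk, chunks))  # order is preserved
    return combine(partial_results)


def sum_of_squares(chunk: Sequence[int]) -> int:
    """Hypothetical per-chunk work: any function of one chunk would do."""
    return sum(x * x for x in chunk)


data = list(range(1_000))
total = parallel_map_reduce(data, sum_of_squares, sum, n_workers=4)
print(total == sum(x * x for x in data))  # True
```

The key requirement is that chunks can be processed independently and their partial results combined afterwards; when that holds, adding workers adds throughput.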

4.3 Data Sharding Techniques

Data sharding involves dividing large datasets into smaller, more manageable parts called shards. Each shard can then be processed independently, allowing for parallelization and efficient data retrieval. Implementing effective data sharding techniques enhances both the speed and scalability of AI applications. This step is particularly crucial when dealing with massive datasets, ensuring that the system can handle diverse data sources without becoming a bottleneck.
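Hash-based sharding is one common way to perform this split: hash each record's key and take the result modulo the number of shards, so the same key always lands on the same shard. The sketch below is illustrative (`shard_for`, `shard_records`, and the `user_id` field are hypothetical). Note that plain modulo hashing moves most keys when the shard count changes, which is why production systems often use consistent hashing or range-based sharding instead.

```python
import hashlib
from collections import defaultdict


def shard_for(key: str, n_shards: int) -> int:
    """Map a key to a shard id in [0, n_shards) using a stable hash.

    Python's built-in hash() is randomized per process for strings, so a
    SHA-256 digest is used to keep assignments identical across machines.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % n_shards


def shard_records(records: list[dict], key_field: str, n_shards: int) -> dict[int, list[dict]]:
    """Group records by shard so each shard can be stored and processed independently."""
    shards: dict[int, list[dict]] = defaultdict(list)
    for record in records:
        shards[shard_for(str(record[key_field]), n_shards)].append(record)
    return dict(shards)


events = [{"user_id": f"user-{i % 7}", "clicks": i} for i in range(20)]
shards = shard_records(events, "user_id", n_shards=4)
for shard_id, rows in sorted(shards.items()):
    print(shard_id, len(rows))  # each shard could now go to a separate worker
```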

5. Real-World Applications of Scalable AI

5.1 E-commerce and Personalization

In the realm of e-commerce, scalable AI plays a pivotal role in providing personalized experiences to users. From product recommendations to personalized marketing campaigns, scalable AI algorithms analyze user behavior, preferences, and historical data to tailor offerings. The ability to scale ensures that as user bases grow, e-commerce platforms can continue delivering relevant and personalized content, fostering customer engagement and satisfaction.

5.2 Healthcare Predictive Analytics

Scalable AI transforms healthcare by enabling predictive analytics on vast medical datasets. From predicting disease outbreaks to identifying personalized treatment plans, scalable AI processes enormous volumes of healthcare data efficiently. As the healthcare industry embraces digitization, the scalability of AI becomes indispensable in managing, analyzing, and extracting meaningful insights from diverse healthcare datasets.

5.3 Autonomous Vehicles

The development of autonomous vehicles relies heavily on scalable AI systems. These systems process real-time data from sensors, cameras, and other sources to make split-second decisions. Scalable AI ensures that autonomous vehicles can adapt to complex and dynamic environments, making them safer and more reliable. As the automotive industry progresses towards widespread adoption of autonomous technology, scalable AI remains a critical component in achieving seamless and efficient operations.

Conclusion

The scalability of Artificial Intelligence (AI) stands as a linchpin in the ever-evolving landscape of technological innovation. This journey through the challenges and best strategies for scaling AI has underscored its transformative role in various industries.

As we navigated the challenges, including limited processing power, data bottlenecks, and algorithmic constraints, it became evident that scalability is not merely a technical concern but a critical factor that shapes the practicality and success of AI applications. Overcoming these challenges requires a holistic approach, from enhancing processing power to addressing the complexities of handling vast datasets and identifying algorithms that inherently support scalability.

The exploration of best strategies for scaling AI illuminated key pathways for organizations to unleash the full potential of their AI applications. Leveraging cloud computing emerged as a powerhouse, offering on-demand access to resources and optimizing cost-effectiveness. Distributed computing architectures, exemplified by technologies like Apache Hadoop, showcased the power of parallel processing in boosting overall system performance. Algorithm optimization, often overlooked, proved to be a fundamental strategy for ensuring efficiency and adaptability in the face of increasing complexity and data volumes.

Adding to these strategies, containerization with Kubernetes emerged as a game-changer, enhancing scalability through consistent deployment, portability, and resource efficiency. AutoML, meanwhile, reflects a shift toward making sophisticated AI models accessible to a broader audience, extending the benefits of scalable AI beyond specialist teams.

Frequently Asked Questions

Q1: How do you overcome limitations in AI?

A: Overcoming limitations in AI involves strategies like enhancing processing power, addressing data bottlenecks, and optimizing algorithms for efficiency. Leveraging advanced technologies such as cloud computing and distributed computing architectures plays a pivotal role in expanding the capabilities of AI systems.

Q2: What are the solutions to scaling AI?

A: Solutions for scaling AI encompass leveraging cloud computing, implementing distributed computing architectures, and optimizing algorithms for efficiency. Additionally, strategies like containerization with Kubernetes and the democratization of AI development through AutoML contribute to scalable AI solutions.

Q3: How do I make AI scalable?

A: Making AI scalable involves employing strategies like leveraging cloud computing resources, implementing distributed computing architectures for parallel processing, and optimizing algorithms for efficiency. Embracing technologies like containerization with Kubernetes and adopting automated machine learning (AutoML) further enhances the scalability of AI systems.

Q4: What is scalability in AI?

A: Scalability in AI refers to the ability of an AI system to efficiently handle increasing workloads and adapt to growing demands. It involves overcoming challenges such as limited processing power, data bottlenecks, and algorithmic constraints to ensure optimal performance and responsiveness as the scale of AI applications expands.

Q5: What are the three major limitations of AI?

A: The three major limitations of AI include limited processing power, challenges posed by vast datasets leading to data bottlenecks, and the constraints associated with certain algorithms that may struggle with increased complexity or larger datasets.

Q6: What are the three limitations of AI today?

A: As of today, three limitations of AI include restricted processing power, data bottlenecks due to the exponential growth of data, and algorithmic constraints where certain AI algorithms face challenges with more extensive datasets or complex computations.

Q7: What is scaling in artificial intelligence?

A: Scaling in artificial intelligence refers to the capability of an AI system to handle increased workloads efficiently. It involves strategies like leveraging cloud computing, implementing distributed computing architectures, and optimizing algorithms to ensure optimal performance as the scale of AI applications expands.

Q8: What are the 4 main problems AI can solve?

A: AI can solve various problems, including data analysis, pattern recognition, automation of repetitive tasks, and natural language processing. These capabilities make AI a valuable tool for enhancing decision-making, efficiency, and innovation across diverse industries.

Q9: What are the different scaling strategies?

A: Different scaling strategies in AI include leveraging cloud computing resources, implementing distributed computing architectures for parallel processing, optimizing algorithms for efficiency, and embracing technologies like containerization with Kubernetes. These strategies contribute to the scalability of AI systems.

Q10: How can I make my AI more efficient?

A: Making AI more efficient involves optimizing algorithms, enhancing processing power, and implementing strategies like parallel processing. Additionally, staying abreast of technological advancements, embracing automation, and continuously refining algorithms contribute to the overall efficiency of AI systems.

Q11: Where is the scale tool in AI?

A: In the context of AI, there isn’t a specific scale tool per se. Scaling in AI is achieved through various strategies like leveraging cloud computing, distributed architectures, and algorithm optimization. The choice of tools depends on the specific needs and challenges of the AI application.

Q12: How do you make something scalable?

A: Making something scalable involves designing systems or processes that can handle increased demands efficiently. In AI, this may include optimizing algorithms, utilizing cloud resources, and implementing distributed computing architectures to ensure scalability without compromising performance.

Q13: What is scalability and how do you achieve scalability?

A: Scalability is the ability of a system to handle increased workloads or demands efficiently. Achieving scalability in AI involves strategies like leveraging cloud computing, implementing distributed computing architectures, optimizing algorithms, and adopting technologies like containerization and automated machine learning.

Q14: What is scalability strategy?

A: A scalability strategy involves the deliberate planning and implementation of measures to ensure that a system or application can efficiently handle increased workloads. In the context of AI, scalability strategies may include leveraging cloud resources, optimizing algorithms, and adopting technologies that enhance overall system scalability.

Q15: What is scalability in machine learning?

A: Scalability in machine learning refers to the capacity of a machine learning system to handle larger datasets, increased complexity, and growing computational demands. Achieving scalability in machine learning involves implementing strategies like parallel processing, optimizing algorithms, and utilizing scalable computing resources.

The Informed Minds

I'm Vijay Kumar, a consultant with 20+ years of experience specializing in Home, Lifestyle, and Technology. From DIY and Home Improvement to Interior Design and Personal Finance, I've worked with diverse clients, offering tailored solutions to their needs. Through this blog, I share my expertise, providing valuable insights and practical advice for free. Together, let's make our homes better and embrace the latest in lifestyle and technology for a brighter future.